TLDR

If you are using SQL, you will eventually come across the GROUP BY and PARTITION BY clauses. While the GROUP BY clause is fairly standard in SQL, many people are unsure when to use the PARTITION BY clause. This easy-to-understand video uses some NBA season data to make the point very clear! I will show you the best use case for PARTITION BY.

Bonus content begins at the 10:03 mark, where I demonstrate a use case for the fundamentals I teach you earlier in the video.

The reviews are in. Make sure to watch the whole video!

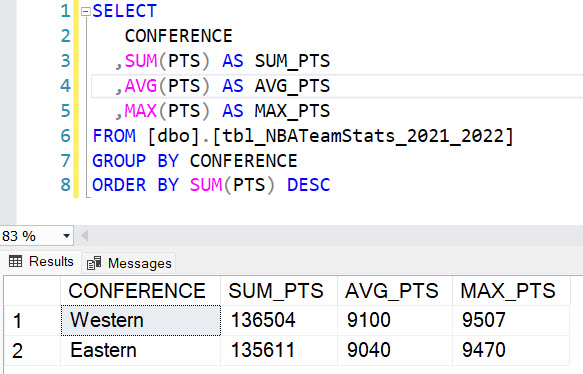

GROUP BY EXAMPLE

To begin, I demonstrate how to use GROUP BY in SQL Server Express to understand basic NBA team statistics based strictly upon conference. I have to tell the database what to GROUP BY to generate all of the aggregate statistics. I select the conference and generate the sum of points, the average points, and the max points. I also order the results by the sum of points in descending order.

When I run the query, the results show how the data points have been grouped by conference. The results show the sum of points by the two values in the conference field (Western and Eastern), the average points, and the max points. I can also see that there are no individual rows in this result, which is expected when using the GROUP BY clause with aggregate functions like MIN, MAX, SUM, and COUNT.

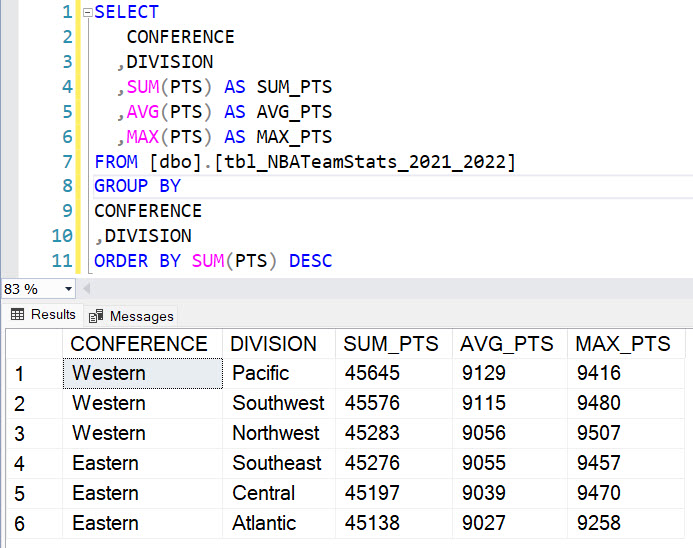
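For readers who want to try the conference-level GROUP BY without a SQL Server instance, here is a minimal sketch that runs the same kind of query through Python's built-in sqlite3 module. The table name, column names, team names, and point totals are all made up for illustration; they are not the NBA figures from the video. One dialect difference worth knowing: SQLite's AVG returns a decimal value, while SQL Server's AVG over an integer column returns an integer.

```python
import sqlite3

# Hypothetical sample rows (team, conference, division, pts); not real NBA data.
ROWS = [
    ("Team A", "Eastern", "Atlantic", 100),
    ("Team B", "Eastern", "Atlantic", 300),
    ("Team C", "Eastern", "Central", 200),
    ("Team D", "Western", "Pacific", 400),
    ("Team E", "Western", "Pacific", 600),
]

conn = sqlite3.connect(":memory:")
# team_stats and its columns are assumed names, not the table used in the video.
conn.execute(
    "CREATE TABLE team_stats (team TEXT, conference TEXT, division TEXT, pts INTEGER)"
)
conn.executemany("INSERT INTO team_stats VALUES (?, ?, ?, ?)", ROWS)

# One output row per conference; individual team rows are collapsed.
GROUP_BY_CONFERENCE = """
SELECT conference,
       SUM(pts) AS sum_pts,
       AVG(pts) AS avg_pts,
       MAX(pts) AS max_pts
FROM team_stats
GROUP BY conference
ORDER BY sum_pts DESC
"""

conference_rows = conn.execute(GROUP_BY_CONFERENCE).fetchall()
for row in conference_rows:
    print(row)
# ('Western', 1000, 500.0, 600)
# ('Eastern', 600, 200.0, 300)
```

Five team rows go in and two conference rows come out, which mirrors the "no individual rows" behavior described above.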

If I want to further break down the results by division, I also need to select the division field and add it to the GROUP BY clause. This further slices the data points into the specific conferences and divisions that the teams play in.

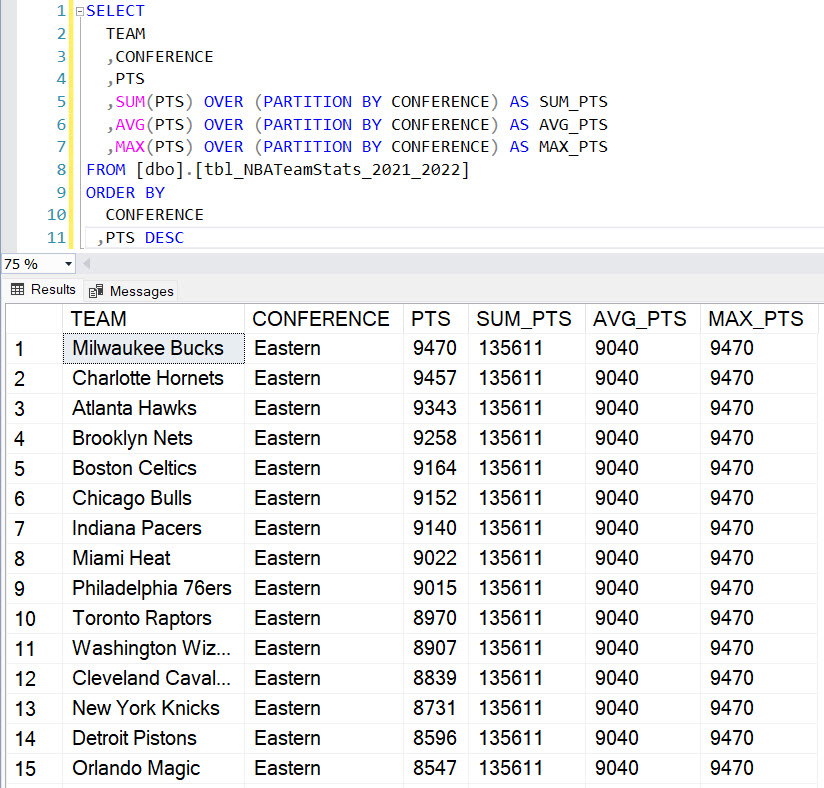
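Adding a second column to the GROUP BY can be sketched the same way. This example again uses Python's built-in sqlite3 module with a hypothetical team_stats table and made-up rows, so the names and numbers are assumptions rather than the data from the video.

```python
import sqlite3

# Hypothetical sample rows (team, conference, division, pts); not real NBA data.
ROWS = [
    ("Team A", "Eastern", "Atlantic", 100),
    ("Team B", "Eastern", "Atlantic", 300),
    ("Team C", "Eastern", "Central", 200),
    ("Team D", "Western", "Pacific", 400),
    ("Team E", "Western", "Pacific", 600),
]

conn = sqlite3.connect(":memory:")
# team_stats and its columns are assumed names, not the table used in the video.
conn.execute(
    "CREATE TABLE team_stats (team TEXT, conference TEXT, division TEXT, pts INTEGER)"
)
conn.executemany("INSERT INTO team_stats VALUES (?, ?, ?, ?)", ROWS)

# division appears in both the SELECT list and the GROUP BY clause,
# so there is now one output row per (conference, division) pair.
GROUP_BY_DIVISION = """
SELECT conference,
       division,
       SUM(pts) AS sum_pts,
       AVG(pts) AS avg_pts,
       MAX(pts) AS max_pts
FROM team_stats
GROUP BY conference, division
ORDER BY sum_pts DESC
"""

division_rows = conn.execute(GROUP_BY_DIVISION).fetchall()
for row in division_rows:
    print(row)
# ('Western', 'Pacific', 1000, 500.0, 600)
# ('Eastern', 'Atlantic', 400, 200.0, 300)
# ('Eastern', 'Central', 200, 200.0, 200)
```

Leaving division out of the GROUP BY while keeping it in the SELECT list is an error in SQL Server, which is why both places need the column.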

PARTITION BY

Now, let’s talk about the OVER clause and its PARTITION BY sub-clause. In this next example, I select the conference and bring in the points, which is our measure. Just like with GROUP BY, I sum the points, but I add “OVER” and the sub-clause “PARTITION BY.” This is where the magic happens because I tell SQL what data point to partition by (i.e., conference) to show the total sum of points by conference.

When I run the query, the results show the sum of points by conference, and I can order the results by individual team points in descending order. Unlike the GROUP BY results, there are still individual team rows in the output.

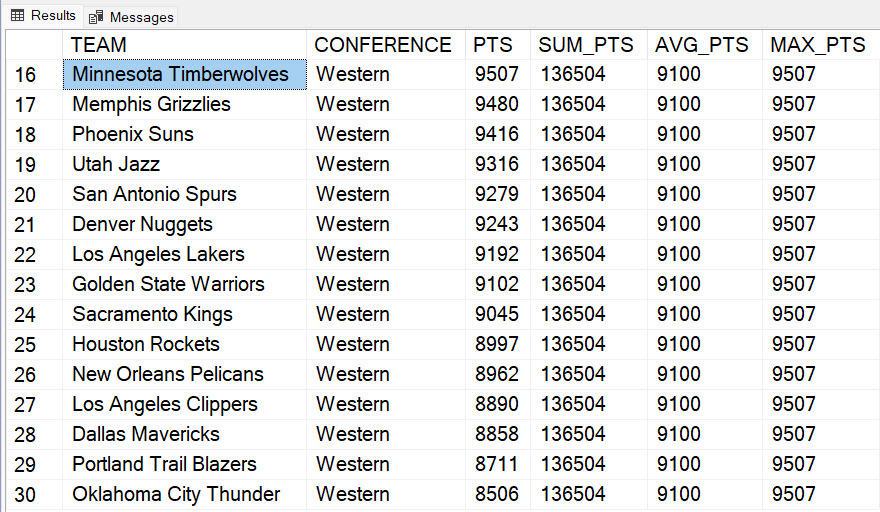

Here is a continuation of the same results for the Western conference teams:

This is an important distinction! By using OVER and PARTITION BY, I can have data at the most granular level (unaggregated points at an individual team level, i.e., the PTS field) combined with data at a higher level of aggregation (points summed, averaged, and the maximum points scored at an overall conference level).

Because these statistics are calculated at the conference level, the values in the last three statistics columns repeat for every team in that conference (i.e., 135611 for SUM_PTS, 9040 for AVG_PTS and 9470 for MAX_PTS in the Eastern Conference). Similar data is returned for the Western Conference.
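The repeating conference-level columns are easy to see in a small sketch. This one uses Python's built-in sqlite3 module (window functions need SQLite 3.25 or newer) with a hypothetical team_stats table and made-up point totals, so none of the names or numbers come from the video.

```python
import sqlite3

# Hypothetical sample rows (team, conference, division, pts); not real NBA data.
ROWS = [
    ("Team A", "Eastern", "Atlantic", 100),
    ("Team B", "Eastern", "Atlantic", 300),
    ("Team C", "Eastern", "Central", 200),
    ("Team D", "Western", "Pacific", 400),
    ("Team E", "Western", "Pacific", 600),
]

conn = sqlite3.connect(":memory:")
# team_stats and its columns are assumed names, not the table used in the video.
conn.execute(
    "CREATE TABLE team_stats (team TEXT, conference TEXT, division TEXT, pts INTEGER)"
)
conn.executemany("INSERT INTO team_stats VALUES (?, ?, ?, ?)", ROWS)

# Each aggregate is computed over the row's conference partition,
# but every team row is kept in the output.
PARTITION_BY_CONFERENCE = """
SELECT team,
       conference,
       pts,
       SUM(pts) OVER (PARTITION BY conference) AS sum_pts,
       AVG(pts) OVER (PARTITION BY conference) AS avg_pts,
       MAX(pts) OVER (PARTITION BY conference) AS max_pts
FROM team_stats
ORDER BY conference, pts DESC
"""

windowed_rows = conn.execute(PARTITION_BY_CONFERENCE).fetchall()
for row in windowed_rows:
    print(row)
# ('Team B', 'Eastern', 300, 600, 200.0, 300)
# ('Team C', 'Eastern', 200, 600, 200.0, 300)
# ('Team A', 'Eastern', 100, 600, 200.0, 300)
# ('Team E', 'Western', 600, 1000, 500.0, 600)
# ('Team D', 'Western', 400, 1000, 500.0, 600)
```

The pts column changes on every row while the last three columns stay constant within each conference, which is the same pattern as the repeated SUM_PTS, AVG_PTS, and MAX_PTS values described above.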

I’m essentially allowed to have my data cake and eat it too with this best of both worlds approach!

IN SUMMARY

The GROUP BY clause is used to group rows that have the same values in a specific column or set of columns. When used with aggregate functions such as SUM, AVG, MAX, MIN, COUNT, etc., the GROUP BY clause allows us to calculate summary statistics for each group. The result will yield one row for each group. Typically, GROUP BY will reduce the number of rows returned by your query.

On the other hand, the PARTITION BY sub-clause is used to divide the data into partitions or subsets based on a specific column or set of columns (like conference in our case). Unlike GROUP BY, PARTITION BY does not reduce the number of rows returned in the result set. Instead, each aggregate function used with OVER (e.g., SUM, AVG, MAX, etc.) adds a new column that shows the result for the partition each row belongs to.
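The row-count difference can be checked directly. This is a small sketch using Python's built-in sqlite3 module (SQLite 3.25 or newer for window functions); the team_stats table and its rows are hypothetical and only there to make the counts visible.

```python
import sqlite3

# Hypothetical sample rows (team, conference, pts); not real NBA data.
ROWS = [
    ("Team A", "Eastern", 100),
    ("Team B", "Eastern", 300),
    ("Team C", "Eastern", 200),
    ("Team D", "Western", 400),
    ("Team E", "Western", 600),
]

conn = sqlite3.connect(":memory:")
# team_stats and its columns are assumed names, not the table used in the video.
conn.execute("CREATE TABLE team_stats (team TEXT, conference TEXT, pts INTEGER)")
conn.executemany("INSERT INTO team_stats VALUES (?, ?, ?)", ROWS)

# GROUP BY collapses the table to one row per conference.
grouped = conn.execute(
    "SELECT conference, SUM(pts) FROM team_stats GROUP BY conference"
).fetchall()

# PARTITION BY keeps every team row and adds the conference total as a column.
windowed = conn.execute(
    "SELECT team, pts, SUM(pts) OVER (PARTITION BY conference) FROM team_stats"
).fetchall()

print(len(ROWS), len(grouped), len(windowed))  # 5 2 5
```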

LET PAT BEV COOK

So remember, when it comes to GROUP BY and PARTITION BY in SQL, just like how the Minnesota Timberwolves balanced an effective array of shots to lead the league in total points scored, understanding the nuances of when to use each clause can make all the difference in winning that crucial play-in game against your data! Yes, you have to watch the video to understand this reference.

Also, I’m not mad at Pat Bev for going over the top, as this win was against a former employer who recently traded him. Success is always the best revenge!!

Happy querying!!

I appreciate everyone who has supported this blog and my YouTube channel via merch. Please check out the logo shop here.

Stay in contact with me through my various social media presences.

Thank you!!

Anthony B Smoak